Today's blog (#48) is about the misuse of statistics, a subject close to my heart. Misuse can happen when getting data, crunching data and presenting data. This is the final blog on gathering information.

'Show me the numbers' was a phrase that typified the first part of my career. Numbers, it was thought, cut through the weasel words people use to make an opportunity sound better than it is. Rest assured, though: through incompetence or outright skullduggery, statistics are often not as innocent as they seem. People with an incentive to persuade you will find statistics that suit their case, and people who want to believe something will not interrogate statistics that let them keep believing it.

Getting data

We all love a poll, but polls are often among the worst offenders when it comes to misleading statistics.

Small sample sizes often mean that a poll does not reflect the broader public.
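To put a rough number on this: for a simple random sample, the usual 95% margin of error on a reported percentage is about 1.96 × √(p(1 − p)/n). The sketch below assumes a simple random sample (real polls rarely are one), and `margin_of_error` is just an illustrative name.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p from a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (100, 1000):
    print(f"n={n}: +/- {margin_of_error(0.5, n) * 100:.1f} points")
```

On these assumptions, a 100-person poll showing 50% is consistent with anything from roughly 40% to 60% (about ±9.8 points), while 1,000 people narrows it to about ±3.1 points.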

How the poll is conducted makes a huge difference to the demographic you reach. Many polls are conducted by calling landlines during the working day! Imagine the skew in the people you would reach that way.

Are the findings reweighted? Reweighting attempts to adjust for biases in the sample (for example, too many older respondents), but not every poll does it.
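A minimal sketch of one common approach, post-stratification: work out the answer within each group, then combine the groups using their share of the population rather than their share of the sample. The age groups, respondent counts and population shares below are made up purely for illustration, and the function name is hypothetical.

```python
def raw_and_reweighted(groups, population_share):
    """
    groups: hypothetical {group: (respondents, said_yes)} counts from a poll.
    population_share: each group's (assumed) share of the real population, summing to 1.
    Returns the unweighted 'yes' share and the post-stratified 'yes' share.
    """
    respondents = sum(n for n, _ in groups.values())
    raw = sum(yes for _, yes in groups.values()) / respondents
    # Weight each group's 'yes' rate by its population share, not its sample share.
    reweighted = sum(population_share[g] * yes / n for g, (n, yes) in groups.items())
    return raw, reweighted


# Hypothetical poll that over-samples over-50s (70% of respondents vs 50% of the population).
poll = {"over_50": (700, 420), "under_50": (300, 90)}
raw, reweighted = raw_and_reweighted(poll, {"over_50": 0.5, "under_50": 0.5})
print(f"raw {raw:.0%}, reweighted {reweighted:.0%}")
```

In this made-up example the headline moves from 51% 'yes' to 45% once the over-sampled group is weighted back down, which is why it matters whether a poll has been reweighted at all.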

Is the question fairly framed? 'Do you trust the Conservative party to govern well?' will elicit a different response from 'Do you trust the party of partygate, multiple breaches of the ministerial code, failed Brexit policy and Liz Truss to govern this poor country well?'

The problem with data science (a little rant)

Much of data science is not science at all. Aside from incorrect assumptions and statistical techniques applied to situations where they are not justified, my biggest problem with much of data science is that it takes dodgy data and pretends to draw rigorous conclusions from it. However flashy your data crunching is, if you start with poor data you cannot safely draw good insights.

Crunching data

At any time, there is at least one statistic suggesting we will go into recession soon. Many doom-and-gloom economists will focus on this one statistic and generate lots of engagement from others who also want to believe it. This is an example of data fishing.

Data fishing (or data dredging) is when you take in lots of data and then mine it for relationships. The problem is that, with enough comparisons, you will come across relationships that have no intuitive basis.

The chart below shows that, over a certain period, US spending on science and certain types of suicide had a correlation of nearly 100%. There is no intuitive basis for this relationship, but it does exist within the data. The two statistics clearly have little in common, so their strong correlation is a coincidence.
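You can reproduce this effect with nothing but noise. The sketch below (all names hypothetical) generates a couple of hundred unrelated random series, each eleven points long as if it were about a decade of annual data, then "fishes" through every pair for the strongest correlation. With nearly 20,000 pairs to choose from, a very high correlation turns up even though every series is random.

```python
import itertools
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def random_walk(length, rng):
    """A trending-looking series built from independent random steps (pure noise)."""
    level, series = 0.0, []
    for _ in range(length):
        level += rng.gauss(0, 1)
        series.append(level)
    return series


def dredge(n_series=200, length=11, seed=48):
    """Generate unrelated series, try every pair, and return the most correlated one."""
    rng = random.Random(seed)
    data = [random_walk(length, rng) for _ in range(n_series)]
    best = max(
        itertools.combinations(range(n_series), 2),
        key=lambda pair: abs(pearson(data[pair[0]], data[pair[1]])),
    )
    return best, pearson(data[best[0]], data[best[1]])


if __name__ == "__main__":
    (i, j), r = dredge()
    print(f"series {i} and {j}: r = {r:.3f}")
```

The exact pair and correlation depend on the seed, but the lesson does not: the more comparisons you make, the more impressive-looking coincidences you will find.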

People looking for a trading strategy often overfit a backtest: the strategy looks great on historical data but does not perform as well in the real world.
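A minimal sketch of how this happens, under deliberately simple assumptions: 1,000 hypothetical "strategies" whose daily returns are pure noise with no edge at all. Picking the one with the best backtest produces an impressive-looking in-sample return, which then falls back towards zero on fresh data. The function names and parameters are illustrative only.

```python
import random
import statistics


def noise_strategies(n_strategies, n_days, rng):
    """Daily returns for strategies with no real edge: pure noise around zero."""
    return [[rng.gauss(0.0, 0.01) for _ in range(n_days)] for _ in range(n_strategies)]


def overfit_backtest(n_strategies=1000, n_days=250, seed=29):
    """Pick the best strategy on one year of data, then check it on the next year."""
    rng = random.Random(seed)
    in_sample = noise_strategies(n_strategies, n_days, rng)
    out_of_sample = noise_strategies(n_strategies, n_days, rng)
    # "Optimise" by choosing whichever strategy had the best backtest.
    best = max(range(n_strategies), key=lambda k: statistics.fmean(in_sample[k]))
    return best, statistics.fmean(in_sample[best]), statistics.fmean(out_of_sample[best])


if __name__ == "__main__":
    best, backtest, live = overfit_backtest()
    print(f"strategy {best}: backtest {backtest:.4%}/day, live {live:.4%}/day")
```

Nothing about the winning strategy is special; it simply happened to fit that particular slice of history best.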

Presenting data

Even once the data has been gathered and crunched, there are plenty of clangers in how it is presented. I will focus on two.

Misrepresenting data to make it more eye-catching is a sport. One example is medical research on mice. Much of this research (e.g. on the impact of a low-fat diet) is a prelude to a human trial. However, the findings of an experiment on mice are often reported as if they applied to humans (e.g. "low-fat diets cause weight gain"), because people would not be interested in reading about an experiment on mice. Many results derived from mice do not replicate in humans, so the headline may simply be untrue.

My other favourite is the 'dodgy chart'. This is a chart that has been doctored to show the stat in question more dramatically. Sometimes this is done by cherry-picking the time period, sometimes by playing around with the axes. The example below makes it look as if the Times had more than double the readership of the Telegraph, but when you look at the y-axis you can see their readerships are very similar.
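The arithmetic behind a truncated axis is simple: the bars only show the part of each value above where the axis starts. In the sketch below the readership figures are invented for illustration, not the real Times or Telegraph numbers, and `apparent_ratio` is a hypothetical helper.

```python
def apparent_ratio(a, b, axis_start=0.0):
    """How many times taller bar a looks than bar b when the y-axis starts at axis_start."""
    return (a - axis_start) / (b - axis_start)


# Hypothetical readerships (in thousands): only about 5% apart.
times, telegraph = 100, 95
print(apparent_ratio(times, telegraph))                 # honest axis starting at zero
print(apparent_ratio(times, telegraph, axis_start=90))  # truncated axis starting at 90
```

With the axis starting at zero the bars differ by about 5%; start it at 90 and one bar looks exactly twice the height of the other, from the same data.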

So what?

How is this all relevant to decision-making? Here are three takeaways I want to leave you with before we pick it up next week:

1) Statistics should be assessed before being used. There are huge incentives to find stats that are eye-catching or persuasive. The cornerstone is good data gathering: nothing good (even with clever data science) can be derived from bad data.

2) When data is crunched, make sure there is a basis for any relationship and that there hasn't been overfitting or data fishing. Data crunching should help you establish real-world relationships, not just fit your sample.

3) Data is so frequently misrepresented and spruced up that it takes a critical eye to spot when something very minor is being sensationalised.

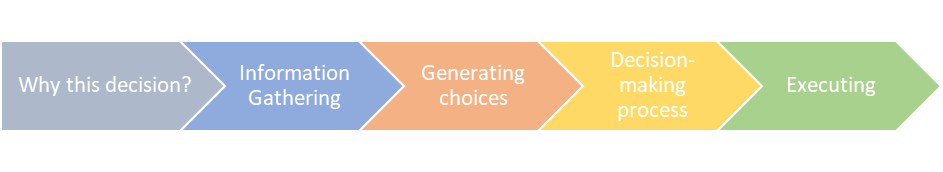

Thank you for joining. Next week: 'What are my choices?' Don't forget to sign up to the subscription list.

Other blogs in the 'Anatomy of a decision' series
